This text describes an experience I shared with students and teachers during a workshop I co-organized at Bliiida (a Third Place in Metz) with the École Supérieure d'Art de Lorraine, where I teach. The workshop, entitled Vivre en espaces potentiels (Living in Potential Spaces), took as its starting point a 3D scanner kindly lent by the company 3D Jungle, whose machines are installed in the Third Place. I invited the students to take ownership of this technology and to try to understand it, find its limits and possibilities, and work on the theme of “potential spaces”. Here, that term replaces its false synonym “virtual”.

This text, however, is not an analysis of that theme or of the participants' proposals. I wish to focus here on the “physical” experience of the scanner. After all, the forms were born in the midst of a shared dance with the machine. In this hybrid experience of shared sensoriality, a relationship is forged with the machine in order to obtain a usable 3D scan, or at least one that delivers the expected result.

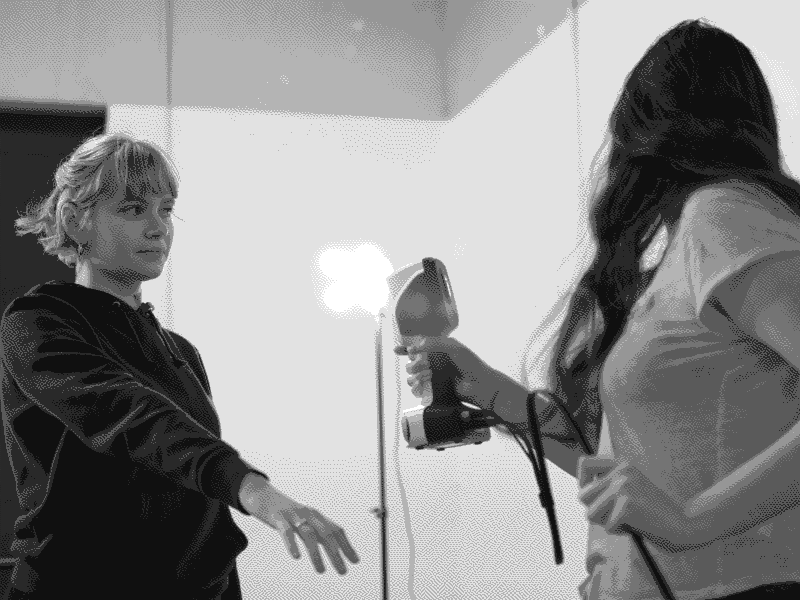

Let's start by describing the device (FIG. 01). The scanner is a sturdy, heavy, elongated plastic tool. Its tapered middle section is sized for the hand, and its upper and lower sections are domed. The lower section houses a double camera, and the upper section houses an infrared transmission and reception system. This strange black-and-white “shower head” is easy to handle because of its shape, yet oddly bulky because of its technology, so it has to be used with great dexterity. The whole unit is connected by a long cable to a computer running 3D shape acquisition software. In our case, the software was displayed on a large television screen so that everyone could clearly see what the device was scanning.

Once the device is in hand, how do you scan a shape? The software guides the entire process in successive stages. First comes a calibration phase using a dedicated test-pattern kit, then a preview, and only then the scanning stage itself. This is where a strange walk begins. It has to be both precise and relatively fast, for two reasons. First, the longer the scan, the more data there is to process, and therefore the heavier the processing load. Above all, we were scanning bodies that could never be perfectly still, so we had to work as fast as possible to avoid movement and the artifacts it produces. This speed, however, requires a certain dexterity and a precise understanding of how the scanner locates itself in space. Once an initial zone has been scanned, it becomes the reference for positioning the rest of the shape. When the software loses its orientation, the operator has to return to this main area so that the software can recalibrate and scanning can resume. In other words, the larger the reference area (a bust, for example), the easier it is to find it again and resume the capture. By contrast, a hand or a face cannot serve for quick recalibration, because these areas are too narrow and move easily.

In this choreography, the first oddity for any newcomer to this type of technology is the loss of visual reference. You are not looking at the space you are moving through, but at the visualization the software offers on a remote screen. To scan, then, you must not look at what you are scanning, but only at what the device sees. The physical space around you reappears only when the software stops displaying the scan properly, or when you need to dodge the various pieces of equipment in the room. To scan quickly and well, you have to learn to look through the device's eyes and understand how it calculates space. After two days of practice, we were well on the way to scanning shapes efficiently. Here, “efficiently” means obtaining the desired shape while keeping the file as lean as possible.

The second oddity is that the software stops scanning when it can no longer orient itself. In other words, you are not the only one who decides when the scanner starts and stops. The scanner regularly interrupts itself and asks to be recalibrated on a previously scanned zone. It does the same if it detects that a scanned zone has moved.

The software thus seems to frustrate our desire to scan movement, or at least to capture a series of postures within a single scan.

However, once we understood how the main calibration zone works, we were able to devise a ruse. The scanner only sees what is immediately in front of it, so movement can take place outside its scanning zone on one condition: the main calibration zone (the torso, for example) must remain unchanged. Apart from this area, everything else can move while it is outside the device's field of vision. When we then rescan the part that has changed, the software treats it as a new area it has not yet scanned. It adds the shape as a “second skin” over the first, which produces a kind of accumulation of superimposed shapes (FIG. 02).

*

What does this experience tell us?

In my doctoral thesis, I wrote a chapter on “connected sensorialities” that describes this phenomenon, at least in part (sections 5.2 and 5.3). To better understand the terms “sense”, “sensoriality” and “sensitivity”, I developed a set of definitions that apply to both humans and machines. In that context, I quoted Merleau-Ponty:

“We must recognize under the name of gaze, hand and in general body a system of systems dedicated to the inspection of the world, capable of spanning distances, of piercing the perceptive future, of drawing in the inconceivable flatness of being hollows and reliefs, distances and gaps, a meaning...” (Maurice Merleau-Ponty, La prose du monde, p.110-111).

I want to stress the phrase “a system of systems dedicated to the inspection of the world”. In other words, it is a system made up of a set of senses that allow us to analyze what surrounds the body or what makes it up. This matches the definition of sensoriality: “Sensitivity of a psychophysiological order; set of functions of the sensory system” (CNRTL). But Merleau-Ponty makes it clear that, for humans, this system of systems is dedicated to making meaning. He plays on the ambiguity of the word “sense” (in French, sens), which means both “feeling” and “meaning”. This is why we regard our bodies as semantic filters (the only ones imposed on us). Computer systems equipped with sensors work in a similar way. They too are systems of systems that analyze their surroundings (with limitations different from those of the human body), and they translate the collected stimuli into calculated meaning.

However, “making sense” here involves a number of choices made by the program's developers, and these choices determine which information can be retrieved. It is the program's designer who makes the sensory choices for the device.

Here, the notion of sensitivity can also shed some light. It speaks to us of “emotional value” (Charles Lenay), and it is linked to the notions of the “sensible” and even of “sentiment”. It also relates to sensors, since adjusting a sensor's sensitivity means setting the lowest value it will register. For the human body, sensitivity is also defined as “the property of living matter to react in a specific way to the action of certain internal or external agents” (CNRTL). Human sensitivity does not work the way it does in a measuring device. It does not correspond to a precise quantity (such as a minimum threshold), but to an approximation, in which one factor is felt relative to another. According to our definitions, sensoriality therefore encompasses the entire sensory system, which is in turn interpreted through different sensitivities. In the human perceptive system, sensitivity plays the same role as in a sensor: it makes us more or less receptive to external information. However, it does not formulate that information in the same way, and it is harder to quantify. Nevertheless, the definition poses a problem for humans. It asserts that this sensitivity makes living beings react in a “specific and timely” way to external stimuli, which is obviously not always the case.

*

Underlying these definitions is a complex relationship between human and machine sensory systems, and between the ways in which each produces meaning. Handling the scanner, and perhaps the difficulty of using it, played out in these interstices. Forget your own sensory system and replace it with that of a machine that imposes its own perception. Understand in depth how the machine perceives, and precisely how its sensitivity works, so that you can master it and possibly outwit it. This reflection pushes the tool to its limit. It extends the tool beyond a utilitarian object and makes it a partner that can (co)produce fabulous things, but that can also cause many problems. On the one hand, the tool's interpretation no longer depends on our own, but on a blend of use and design. On the other hand, the result of the scan depends on our ability to collaborate with an alien, machinic sensory system.

Antonin Jousse, Sensorialités connectées : Réévaluation de la notion d’interactivité dans le champ contemporain des arts numériques, doctoral thesis, 2021.

Charles Lenay, « Corps touchant, corps touché : l’expérience de la séparation », in IMPEC : Interactions Multimodales Par ECran, theme: corps et écran, ENS de Lyon, 4-6 July 2018.

Maurice Merleau-Ponty, La prose du monde, Paris: Gallimard, 1969, 215 p.

FIG. 01. Photograph of the 3D scanner.

FIG. 02. Image created by a student during a workshop on the theme of the scream. The double-skin phenomenon is visible on the face.

FIG. 03. Two students using the scanner.